Paper Summary: Can a Decentralized Metadata Service Layer Benefit Parallel Filesystems?

Parallel filesystems do a good job of providing parallel and scalable access for data transfer, but, due to consistency concerns, metadata accesses are still directed to a single metadata server (MDS), which becomes a bottleneck. This is a problem for scalability because studies show that over 75% of all filesystem calls require access to file metadata.

This paper uses ZooKeeper as a decentralized MDS for parallel filesystems and tests whether that improves performance. You may ask what is decentralized about ZooKeeper, and you would be right about the update requests: they are still serialized through a single leader. But for read requests, ZooKeeper helps by allowing any ZooKeeper server to reply while guaranteeing consistency. (You would still need to issue a sync operation if the request needs the read to be the freshest and to satisfy precedence order.)
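To make the read/sync distinction concrete, here is a toy, in-memory sketch. It is not the ZooKeeper client API: the `Leader` and `Follower` classes, their methods, and the `/dufs/a.txt` path are hypothetical names chosen for illustration. The only assumptions are that a single leader orders all updates and that each server answers reads from its own local copy.

```python
class Leader:
    """Orders all updates into one log (toy stand-in for the ZooKeeper leader)."""

    def __init__(self):
        self.log = []  # ordered list of (path, value) updates

    def write(self, path, value):
        self.log.append((path, value))
        return len(self.log)  # position in the log, loosely like a zxid


class Follower:
    """Serves reads from a local copy that may lag behind the leader."""

    def __init__(self, leader):
        self.leader = leader
        self.applied = 0  # number of log entries applied locally
        self.data = {}

    def catch_up(self):
        # Apply every log entry this follower has not seen yet.
        for path, value in self.leader.log[self.applied:]:
            self.data[path] = value
        self.applied = len(self.leader.log)

    def read(self, path):
        # Local read: fast, but possibly stale.
        return self.data.get(path)

    def sync_then_read(self, path):
        # Catch up with the leader first, then read locally.
        self.catch_up()
        return self.read(path)


def demo():
    leader = Leader()
    near, lagging = Follower(leader), Follower(leader)
    leader.write("/dufs/a.txt", "fid-1")
    near.catch_up()
    lagging.catch_up()
    leader.write("/dufs/a.txt", "fid-2")
    near.catch_up()  # 'lagging' has not applied the second update yet
    stale = lagging.read("/dufs/a.txt")
    fresh = lagging.sync_then_read("/dufs/a.txt")
    return stale, fresh
```

Calling `demo()` returns `("fid-1", "fid-2")`: the plain read on the lagging server sees the older value, and the read issued after a sync sees the latest one. The point of the sketch is the trade-off: local reads spread load across servers, while a sync adds a round trip through the leader.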

If you recall, ZooKeeper uses a filesystem API to enable clients to build higher-level coordination primitives (group membership, locking, barrier sync). This paper is interesting because it takes ZooKeeper and uses it directly as a metadata server for a filesystem, leveraging the filesystem API ZooKeeper exposes in a literal manner. FUSE is used to act as the glue between ZooKeeper as the MDS and the underlying physical storage filesystem.

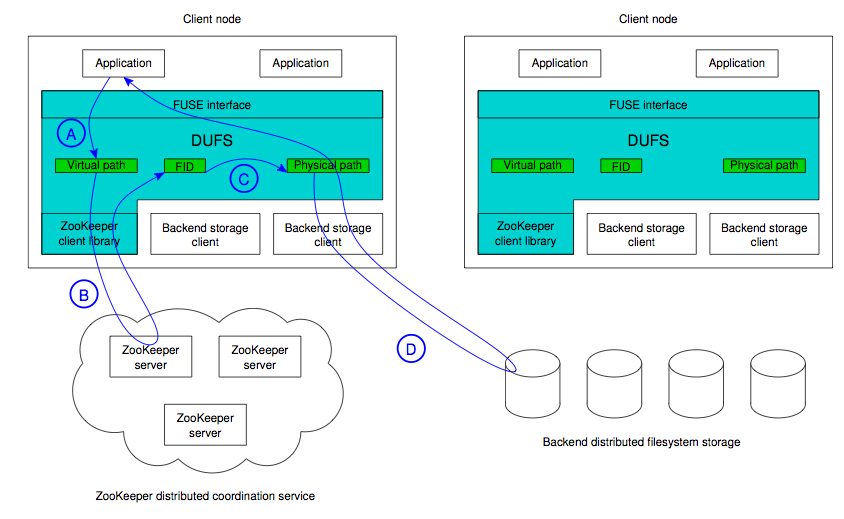

Distributed Union FileSystem (DUFS) architecture

A DUFS client instance does not interact directly with other DUFS clients; any necessary interaction is made through the ZooKeeper service. The figure shows the basic steps required to perform an open() operation on a file using DUFS.

- A. The open() call is intercepted by FUSE, which gives the virtual path of the file to DUFS.

- B. DUFS queries ZooKeeper to get the Znode based on the filename and to retrieve the file id (FID).

- C. DUFS uses the deterministic mapping function to find the physical path associated with the FID.

- D. Finally, DUFS opens the file based on its physical path. The result is returned to the application via FUSE.
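The steps above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: a plain dict stands in for the ZooKeeper namespace, the back-end mount points in `BACKENDS` are made up, and hashing the FID to pick a back end is just one possible deterministic mapping (the summary does not say which function DUFS uses). FUSE itself is omitted; `dufs_open` is what the FUSE handler would call.

```python
import hashlib

# Hypothetical stand-in for the ZooKeeper namespace: virtual path -> FID.
ZNODES = {"/projects/run1/output.dat": "000000000000002a"}

# Hypothetical mount points of the underlying storage filesystems.
BACKENDS = ["/mnt/pfs0", "/mnt/pfs1"]


def lookup_fid(virtual_path, znodes=ZNODES):
    """Step B: fetch the FID stored in the znode for this virtual path."""
    try:
        return znodes[virtual_path]
    except KeyError:
        raise FileNotFoundError(virtual_path) from None


def physical_path(fid, backends=BACKENDS):
    """Step C: deterministic FID -> physical path mapping (assumed: hash mod N)."""
    digest = hashlib.sha1(fid.encode("utf-8")).digest()
    index = int.from_bytes(digest[:4], "big") % len(backends)
    return f"{backends[index]}/{fid}"


def resolve(virtual_path):
    """Steps B and C together: virtual path -> physical path."""
    return physical_path(lookup_fid(virtual_path))


def dufs_open(virtual_path, mode="rb"):
    """Step D: open the file on the back-end storage by its physical path."""
    return open(resolve(virtual_path), mode)
```

Because the mapping depends only on the FID, every client computes the same physical path without talking to any other client, which matches the design point that clients coordinate only through the metadata service.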

In contrast, directory operations take place only at the metadata level, so only ZooKeeper is involved and not the back-end storage. For example, the directory stat() operation is satisfied at ZooKeeper itself (the back-end storage is not contacted), since DUFS maintains the entire directory hierarchy in ZooKeeper.
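As a small illustration of a metadata-only operation, the sketch below answers a directory stat from an in-memory tree and never touches a storage back end. Everything here is hypothetical: `MetadataStore` is a dict-backed stand-in for the ZooKeeper namespace, and the fields returned by `stat_dir` are chosen for the example rather than taken from the paper.

```python
class MetadataStore:
    """Dict-backed stand-in for the directory hierarchy kept in ZooKeeper."""

    def __init__(self):
        self.nodes = {"/": {"type": "dir", "mode": 0o755}}

    def mkdir(self, path, mode=0o755):
        parent = path.rsplit("/", 1)[0] or "/"
        if self.nodes.get(parent, {}).get("type") != "dir":
            raise FileNotFoundError(parent)
        self.nodes[path] = {"type": "dir", "mode": mode}

    def children(self, path):
        # Direct children only: strip the prefix and reject deeper paths.
        prefix = path.rstrip("/") + "/"
        names = []
        for p in self.nodes:
            if p != path and p.startswith(prefix):
                rest = p[len(prefix):]
                if "/" not in rest:
                    names.append(rest)
        return sorted(names)


def stat_dir(store, path):
    """Answer a directory stat purely from metadata; no back-end I/O."""
    node = store.nodes.get(path)
    if node is None or node["type"] != "dir":
        raise NotADirectoryError(path)
    return {
        "type": "dir",
        "mode": node["mode"],
        "entries": len(store.children(path)),
    }
```

A short usage example: after `store = MetadataStore()`, `store.mkdir("/a")` and `store.mkdir("/a/b")`, calling `stat_dir(store, "/a")` reports one entry without any call that reaches the storage layer.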

Evaluation

These tests were performed on a Linux cluster. Each node has a dual Intel Xeon E5335 CPU (8 cores in total) and 6GB of memory. A 250GB SATA hard drive is used as the storage device on each node. The nodes are connected with 1GigE.

I am bugged by some of the limitations of the evaluation. In these tests the ZooKeeper servers are colocated with the DUFS clients (running on the same nodes). This naturally works wonders for read request latencies! But this is not a very reasonable setup. Moreover, the clients are not under the control of DUFS, so it is not a good idea to deploy your ZooKeeper servers on clients which are uncontrolled and can disconnect at any time. Finally, this rules out clients that are far away. Of course, ZooKeeper does not scale to WAN environments, and all the tests are done in a controlled cluster environment.

Conclusion

This paper investigates an interesting idea: using ZooKeeper as the MDS of parallel filesystems to provide some scalability to the MDS. Thanks to the advantages of ZooKeeper, this improves read access, because reads can be served consistently from any ZooKeeper server. But, due to the limitations of ZooKeeper, it fails to address the scalability of update requests (the throughput of update operations actually decreases as the number of ZooKeeper replicas increases) and also lacks the scalability needed for WAN deployments. Another limitation of this approach is that the metadata needs to fit into a single ZooKeeper server (and of course also into each of the ZooKeeper replicas), so there is a scalability problem with respect to the filesystem size as well.

We are working on a scalable WAN version of ZooKeeper, and we will use parallel filesystems as our application to showcase a WAN filesystem leveraging our prototype coordination system.
