Retroscope: Retrospective Monitoring Of Distributed Systems (Part 2)

This post, part 2, focuses on monitoring distributed systems using Retroscope. This is a joint post. If you are unfamiliar with hybrid logical clocks and distributed snapshots, give part 1 a read first.

Monitoring and debugging distributed applications is a grueling task. When you need to debug a distributed application, you will often be required to carefully examine the logs from different components of the application and try to figure out how these components interact with each other. Our monitoring solution, Retroscope, can help you with aligning/sorting these logs and searching/focusing on the interesting parts. In particular, Retroscope captures a progression of globally consistent distributed states of a system and allows you to examine these states and search for global predicates.

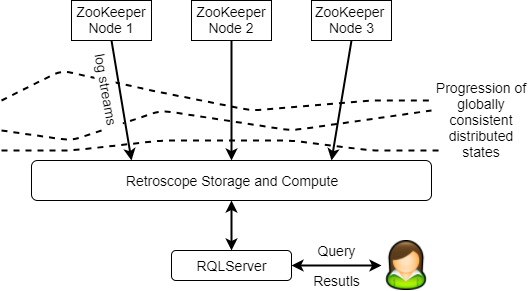

Let's say you are working on debugging ZooKeeper, a popular coordination service. Using Retroscope you can easily add instrumentation to the ZooKeeper nodes to log and stream events to the Retroscope Storage and Compute module. After that, you can use RQL, Retroscope's simple SQL-like query language, to process your queries on those logs to reveal the predicates/conditions that hold on the globally consistent cuts sorted in Retroscope's compute module.

Let's say you want to examine the staleness of the data in the ZooKeeper cluster (since ZooKeeper allows local reads from a single node, a client can read a stale value that has already been updated). By adding instrumentation to track the versions of znodes (the objects that keep data) at each ZooKeeper node, you can monitor how a particular znode changes across the entire cluster in a progression of globally consistent cuts with a very short query: "SELECT vr1 FROM setd;", where setd is the name of the log keeping track of changes and vr1 is the version of znode r1.

The above example still requires you to sift through all the changes in znode r1 across a progression of cuts to see whether there are stale values of r1 in some of the cuts. Instead, you can modify the query to have it return only globally consistent cuts that contain stale versions of the znode: "SELECT vr1 FROM setd WHEN EXISTS v1, v2 IN vr1 : (v1 - v2 >= 2)". RQL treats different versions of the variable vr1 that exist on various nodes as a set, allowing you to write queries exploring such sets. With this expression, Retroscope computes the difference between znode r1's versions across any two nodes for all possible combinations of nodes, and if the difference is 2 or more, Retroscope emits the cut. This query will give you precise knowledge about global states in which the znode differs by at least 2 versions between nodes in the system.
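
To make the set semantics concrete, here is a minimal Python sketch of how such a predicate could be checked on a single cut. This is not Retroscope's implementation: the cut representation (a dict from node name to the version of r1 seen on that node), the function name `exists_stale_pair`, and the sample values are all assumptions made for illustration.

```python
from itertools import permutations

def exists_stale_pair(vr1_by_node, threshold=2):
    """Mimic EXISTS v1, v2 IN vr1 : (v1 - v2 >= threshold) on one cut.

    vr1_by_node is a hypothetical representation of a cut: it maps each
    node name to the version of znode r1 observed on that node.
    """
    versions = vr1_by_node.values()
    # Try every ordered pair of nodes, as the query text describes.
    return any(v1 - v2 >= threshold for v1, v2 in permutations(versions, 2))

# Invented sample cuts.
cut_a = {"node1": 5, "node2": 6, "node3": 7}
cut_b = {"node1": 6, "node2": 7, "node3": 7}

print(exists_stale_pair(cut_a))  # True: node3 is 2 versions ahead of node1
print(exists_stale_pair(cut_b))  # False: the largest gap is 1 version
```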

Let's say you got curious about the cause of the staleness in some of the ZooKeeper nodes. By including more information to be returned in each cut, you can test various hypotheses about the causes of staleness. For instance, if you suspect some ZooKeeper nodes to be disproportionally loaded by clients, you can test it with a query that returns the number of active client connections: "SELECT vr1, client_connections FROM setd WHEN EXISTS v1, v2 IN vr1 : (v1 - v2 >= 2)". And you can further refine your query to filter staleness cases to when the imbalance in client connections is high: "SELECT vr1, client_connections FROM setd WHEN EXISTS v1, v2 IN vr1 : (v1 - v2 >= 2) AND EXISTS c1, c2 IN client_connections: (c1 - c2 > 50)".
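
A rough Python sketch of the refined condition follows. It relies on the observation that "some pair differs by at least k" is the same as "max minus min is at least k", so no pairwise loop is needed for this particular predicate. The per-node record layout, the helper names `spread` and `matches`, and the numbers are assumptions for illustration, not Retroscope's data model.

```python
def spread(values):
    """Largest difference between any two values in the set."""
    values = list(values)
    return max(values) - min(values)

def matches(cut):
    """Staleness of 2+ versions AND a connection imbalance above 50."""
    versions = [node["vr1"] for node in cut.values()]
    connections = [node["client_connections"] for node in cut.values()]
    return spread(versions) >= 2 and spread(connections) > 50

# Invented cuts: each maps a node name to the variables logged on that node.
cuts = [
    {"n1": {"vr1": 7, "client_connections": 120},
     "n2": {"vr1": 9, "client_connections": 40}},   # stale and imbalanced
    {"n1": {"vr1": 7, "client_connections": 60},
     "n2": {"vr1": 9, "client_connections": 40}},   # stale, but balanced
    {"n1": {"vr1": 9, "client_connections": 120},
     "n2": {"vr1": 9, "client_connections": 40}},   # imbalanced, not stale
]

emitted = [cut for cut in cuts if matches(cut)]
print(len(emitted))  # 1
```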

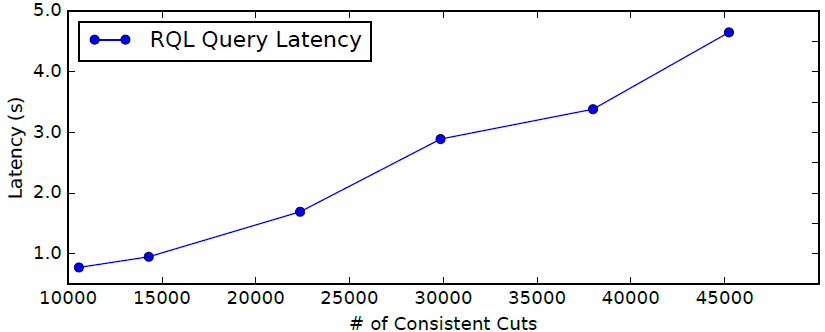

Architecture

OK, let's look at how Retroscope works under the hood. To keep the overhead as low as possible for the system being monitored, we augment the target system nodes with only a lightweight RetroLog server module to extract/forward logs to a separate Retroscope storage/compute cluster for monitoring. RetroLogs employ Apache Ignite's streaming capabilities to deliver the logs to the Retroscope storage/compute cluster. RetroLogs also provide Hybrid Logical Clock (HLC) capabilities to the target system being monitored. HLC is an essential component of Retroscope, as it allows the system to identify consistent cuts. The logging part is similar to how regular logging tools work, except the values being logged are not just messages, but key-value pairs with the key being the variable being monitored and the value being, well, the current value of the variable.
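
As a rough illustration of the two ideas in this paragraph, the Python sketch below pairs the standard HLC update rules with a key-value log record. It is not the RetroLog API: the class names, `log_record`, the record fields, and the injected physical clock are assumptions for illustration only.

```python
from dataclasses import dataclass

@dataclass(frozen=True, order=True)
class HLC:
    l: int  # largest physical time seen so far
    c: int  # logical counter that breaks ties within the same l

class HLCClock:
    def __init__(self, physical_time):
        self._now = physical_time  # callable returning an integer time
        self.l = 0
        self.c = 0

    def tick(self):
        """Timestamp a local or send event."""
        prev = self.l
        self.l = max(prev, self._now())
        self.c = self.c + 1 if self.l == prev else 0
        return HLC(self.l, self.c)

    def receive(self, remote):
        """Merge the timestamp carried by an incoming message."""
        prev = self.l
        self.l = max(prev, remote.l, self._now())
        if self.l == prev and self.l == remote.l:
            self.c = max(self.c, remote.c) + 1
        elif self.l == prev:
            self.c += 1
        elif self.l == remote.l:
            self.c = remote.c + 1
        else:
            self.c = 0
        return HLC(self.l, self.c)

def log_record(clock, node, key, value):
    """Hypothetical log entry: the monitored variable and its current value."""
    return {"hlc": clock.tick(), "node": node, "key": key, "value": value}

# Deterministic fake physical clock so the example is reproducible.
times = iter([10, 10, 12])
clock = HLCClock(lambda: next(times))

first = log_record(clock, "node1", "vr1", 4)
second = log_record(clock, "node1", "vr1", 5)
merged = clock.receive(HLC(15, 3))

print(first["hlc"], second["hlc"], merged)
```

Because the timestamps are ordered tuples, records from different nodes can be sorted and compared directly, which is what makes it possible to line them up into consistent cuts.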

The architecture figure above shows the insides of the Retroscope storage/compute cluster implemented using Apache Ignite. As the RetroLog servers stream logs to the Ignite cluster for aggregation and storage, Ignite streaming breaks messages into blocks based on their HLC time. For instance, all messages between timestamps 10 and 20 will be in the same block. The Ignite Data Grid then stores the blocks until they are needed for computing the past consistent cuts.
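
The blocking step can be sketched in a few lines of Python. The block width of 10, the half-open boundaries (a message at timestamp 20 starts the next block), and the event layout are assumptions; the post does not specify them, and this does not use Ignite's actual streaming API.

```python
from collections import defaultdict

BLOCK_WIDTH = 10  # assumed width, matching the 10-to-20 example

def block_id(hlc_time, width=BLOCK_WIDTH):
    """Block index for a timestamp, using half-open ranges [k*width, (k+1)*width)."""
    return hlc_time // width

def group_into_blocks(events, width=BLOCK_WIDTH):
    """Group events by the block their HLC time falls into."""
    blocks = defaultdict(list)
    for event in events:
        blocks[block_id(event["hlc"], width)].append(event)
    return dict(blocks)

# Invented events; only the HLC time matters for blocking.
events = [{"hlc": t, "key": "vr1", "value": t} for t in (9, 10, 15, 19, 20)]
blocks = group_into_blocks(events)

for block, members in sorted(blocks.items()):
    print(block, [e["hlc"] for e in members])
```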

The RQLServer acts as the query parser and task scheduler for our monitoring tool. It breaks down the duration of state-changes over which a query needs to be evaluated into non-overlapping time-shards and assigns these time-shards to worker nodes for evaluation. As workers process through their assignments, they emit the results back to the RQLServer, which then presents the complete output to you.
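
Here is one way the sharding and assignment could look, as a Python sketch. The fixed shard length and the round-robin assignment are assumptions picked for simplicity; the post does not describe the RQLServer's actual scheduling policy, and the function names are hypothetical.

```python
def make_time_shards(start, end, shard_len):
    """Split [start, end) into non-overlapping half-open time-shards."""
    return [(lo, min(lo + shard_len, end)) for lo in range(start, end, shard_len)]

def assign_round_robin(shards, workers):
    """Hand out shards to workers in turn (an assumed policy)."""
    assignment = {worker: [] for worker in workers}
    for i, shard in enumerate(shards):
        assignment[workers[i % len(workers)]].append(shard)
    return assignment

shards = make_time_shards(0, 100, 30)
assignment = assign_round_robin(shards, ["worker1", "worker2"])

print(shards)
print(assignment)
```

Since the shards do not overlap, each worker can evaluate its shards independently and the server only has to concatenate the results in time order.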

Let's now zoom in to the Retroscope workers. These workers are designed to search for predicates through a sequence of global distributed states. The search always starts at some known state and progresses forward one state-change at a time. (Each time-shard has its starting state computed before being assigned to the workers. This process is done only once, as time-shards are reused between different queries. When workers perform the search, they always start at the time-shard's starting state and progress forward one state-change at a time.) As the worker advances forward and applies changes, it arrives at new consistent cuts and evaluates the query predicate to decide if the cut must be emitted to the user or not. The figure illustrates running the "SELECT vr1 FROM setd WHEN EXISTS v1, v2 IN vr1 : (v1 - v2 >= 2)" query on a ZooKeeper cluster of 3 nodes. Each time a variable's value is changed, it is recorded into a Retroscope log. As the system starts to search for the global states satisfying the query condition, it first looks at state c0. At this cut the difference between versions on Node 3 and Node 1 is 2, meaning that the worker emits the cut back. The system then moves forward and eventually reaches cut c5, at which the staleness is only 1 version, so c5 is skipped. The search ends when all consistent cuts are evaluated this way. In the example, you will see cuts c0, c1, c2, c3, c4 and c6 being emitted, while cut c5 has small staleness and does not satisfy the condition of the query.
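
The worker's search loop can be approximated with the short Python sketch below. It is a simplification under stated assumptions: every state change is treated as producing the next cut, the changes are already in HLC order, and the version numbers are invented so that the emitted pattern matches the one described above (c5 skipped). The names `replay` and `stale_by_two` are hypothetical.

```python
def replay(start_state, changes, predicate):
    """Walk forward one state change at a time, yielding cuts that match."""
    state = dict(start_state)
    if predicate(state):
        yield 0, dict(state)          # the shard's starting state is cut c0
    for index, (node, version) in enumerate(changes, start=1):
        state[node] = version         # apply the next logged change
        if predicate(state):
            yield index, dict(state)  # emit a copy of the matching cut

def stale_by_two(state):
    """Some pair of nodes differs by at least 2 versions."""
    return max(state.values()) - min(state.values()) >= 2

# Invented starting state and change log for a three-node cluster.
start = {"node1": 1, "node2": 2, "node3": 3}
changes = [("node2", 3), ("node3", 4), ("node1", 2),
           ("node2", 4), ("node1", 3), ("node3", 5)]

emitted = [f"c{i}" for i, _ in replay(start, changes, stale_by_two)]
print(emitted)  # ['c0', 'c1', 'c2', 'c3', 'c4', 'c6']
```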

Preliminary Evaluation

We performed a preliminary evaluation of Retroscope monitoring on ZooKeeper to study the znode staleness from the server perspective. To the best of our knowledge, no prior studies have been conducted to see how stale ZooKeeper values can be from the server standpoint.

We started by looking at a normally running ZooKeeper cluster on AWS and ran a test workload of 10,000 updates to either a single znode or 10 znodes. All the updates were done by a single synchronous client to make sure that the ZooKeeper cluster was not saturated under a heavy load. After the updates were over, we ran a simple retrospective RQL query to retrieve consistent states at which values have been changing in znode r1: "SELECT vr1 FROM setd;", where setd is the name of the log keeping track of changes and vr1 is the version of znode r1. This query produces lots of cuts that must be examined manually, yet a quick glance over it shows that a staleness of 1 version is common. This is normal behavior, as Retroscope was capturing staleness of 1 version right after the value has been committed, and the commit messages may still be in flight to the follower nodes.

To search whether there exist any cases where staleness is 2 versions or greater, we then evaluated the following query: "SELECT vr1 FROM setd WHEN EXISTS v1, v2 IN vr1 : (v1 - v2 >= 2)". With this query we learned that by targeting a single znode, we were able to observe a staleness of 2 or more versions on about 0.40% of consistent cuts examined. Surprisingly, we also observed a spike of 9 versions in staleness at one cut, yet the stale node was then able to quickly catch up. (Probably that spike was due to garbage collection.) We observed that spreading the load across 10 znodes reduced the staleness observed for a single znode.

We performed the same staleness experiment on the cluster with one straggler ZooKeeper node that was artificially made to introduce a slight delay of 2ms in the processing of incoming messages. With the straggler, ZooKeeper staleness hit extremes: the slow node was able to keep up with the rest of the cluster only for the first 7 seconds of the test run but then fell further and further behind.

We measured the query processing performance on a single AWS EC2 t2.micro worker node with 1 vCPU and 1 GB of RAM. With such limited resources, a Retroscope worker was still able to process up to 14,000 consistent cuts per minute in our ZooKeeper experiments.

We also tested the performance of Retroscope using a synthetic workload. Our test cluster consisted of 2 quad-core machines with 16 GB of RAM connected over WiFi. Each machine was hosting an Ignite node and a synthetic workload generator that streamed events to both Ignite nodes. This cluster was able to intake 16,500 events per second. The query performance running on the cluster was above 300,000 consistent cuts per second, for a moderately complex query involving a set operation and a comparison.

Future Plan

We are working on enhancing the capabilities of Retroscope to provide a richer and more universal querying language for a variety of monitoring and debugging situations. Our plans include adding more complex searching capabilities with conditions that can span across multiple consistent cuts. We are also expanding the logging and processing capabilities to allow keeping track of arrays and sets instead of just single-value variables.

Related Links

Here are my other posts on "monitoring" systems:

https://christmasloveday.blogspot.com//search?q=monitoring
